This is a post for all the AWS CLI one-liners that I stumble upon. Note that they will be updated over time.

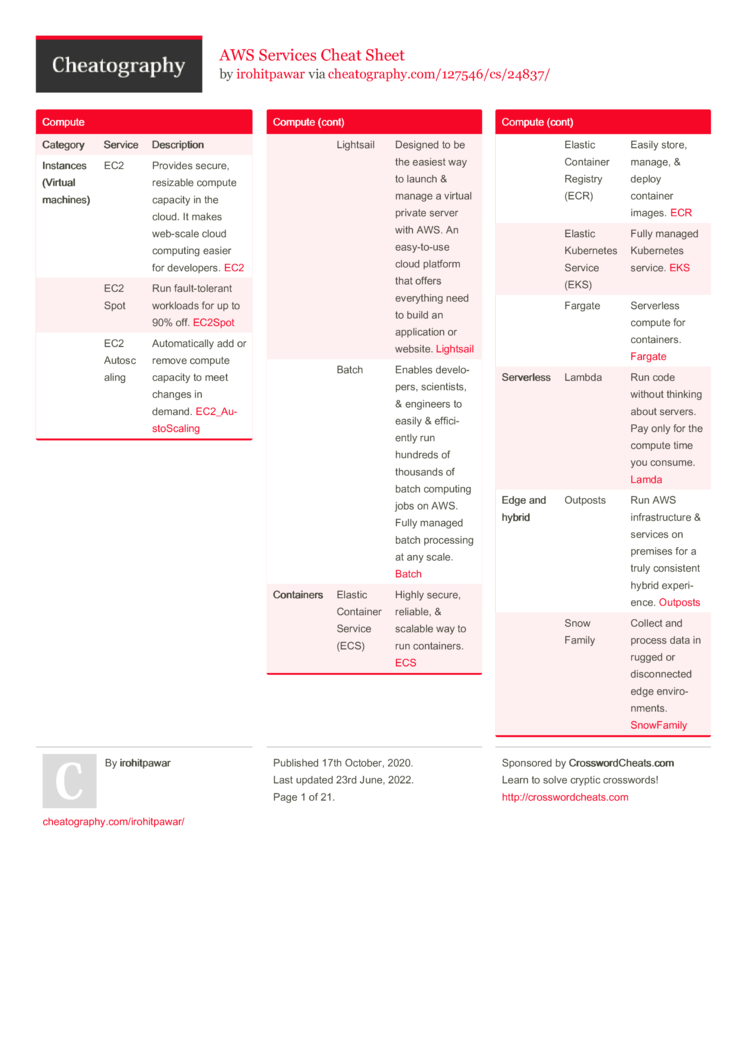

Amazon Web Services Command Line Interface (AWS CLI) - Cheat Sheet

I have been standing up quite a bit of infrastructure in AWS lately using the AWS CLI. Here are some commands that I found helpful, in a cheat sheet format.

To create a container repository, go to your AWS console, then Services, then Elastic Container Service. Click on Create Repository, input your repository name, and click on Next Step. The AWS console will show pretty much the same instructions as below.

ECS

aws ecs create-cluster --cluster-name=NAME --generate-cli-skeleton

aws ecs create-service

S3

aws s3 ls s3://mybucket

aws s3 rm s3://mybucket/folder --recursive

EC2

aws ec2 describe-instances

aws ec2 start-instances --instance-ids i-12345678c

aws ec2 terminate-instances --instance-ids i-12345678c

RDS

Describe All RDS DB Instances:

Describe a RDS DB Instance with a dbname:

List all RDS DB Instances and limit output:

List all RDS DB Instances that has backups enabled, and limit output:

Describe DB Snapshots for DB Instance Name:

Events for the last 24 Hours:

List Public RDS Instances:
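The original command for this entry is not shown here, so the following is only a minimal sketch of the same idea in Python. It assumes a boto3 RDS client is passed in; the function name `public_db_instances` is hypothetical.

```python
def public_db_instances(rds_client):
    """Return the identifiers of DB instances flagged as publicly accessible.

    rds_client is assumed to be a boto3 RDS client, e.g. boto3.client("rds").
    """
    public = []
    paginator = rds_client.get_paginator("describe_db_instances")
    for page in paginator.paginate():
        for db in page["DBInstances"]:
            # PubliclyAccessible is a boolean on each DB instance description
            if db.get("PubliclyAccessible"):
                public.append(db["DBInstanceIdentifier"])
    return public

# Usage (needs boto3 and valid credentials):
#   import boto3
#   print(public_db_instances(boto3.client("rds")))
```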

SSM Parameter Store:

List all parameters by path:

Get a value from a parameter:


This contains various commands and information that I find useful for AWS work.

Resource:https://docs.aws.amazon.com/cli/latest/userguide/install-cliv2-linux.html

Backup instance manually

- Go to your instance

- Right click and select Image from the dropdown

- Click Create Image

- Give your backup a name and description

- Click No reboot if you want your instance to stay in a running state

- Click Create Image

- At this point you should be able to find the AMI that is associated with your backup under AMIs. Give the AMI a more descriptive name if you'd like.

Resource:https://n2ws.com/blog/how-to-guides/automate-amazon-ec2-instance-backup

Parameter Store location

- Login

- Search for Systems Manager

- Click on Parameter Store in the menu on the left-hand side

Use env vars

Run the following with the proper values:

Set up credentials file

You can run aws configure if you want a guided setup. Alternatively, you can add the following to ~/.aws/credentials:

If you don't opt for the guided setup, don't forget to set the region in ~/.aws/config:

Populate env vars with credentials file
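As a rough illustration of this step, here is a sketch in Python rather than shell. It assumes the usual INI layout of ~/.aws/credentials; the helper name `credentials_to_env` is hypothetical. It also picks up `aws_session_token` when present, which covers temporary credentials.

```python
import configparser


def credentials_to_env(credentials_text, profile="default"):
    """Map one profile of an AWS credentials file to the standard env var names."""
    # interpolation=None so a '%' in a value is never treated specially
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(credentials_text)
    section = parser[profile]
    env = {
        "AWS_ACCESS_KEY_ID": section["aws_access_key_id"],
        "AWS_SECRET_ACCESS_KEY": section["aws_secret_access_key"],
    }
    # Only temporary credentials carry a session token
    if "aws_session_token" in section:
        env["AWS_SESSION_TOKEN"] = section["aws_session_token"]
    return env

# Usage:
#   import os
#   from pathlib import Path
#   text = Path("~/.aws/credentials").expanduser().read_text()
#   os.environ.update(credentials_to_env(text))
```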

Resource:https://github.com/kubernetes/kops/blob/master/docs/getting_started/aws.md#setup-iam-user

Populate config file with env vars

Multiple profiles

Your credentials file will probably look something like this:

To use the notdefault profile, run the following command:

Use temp credentials

Add the following to your credentials file:

Then run this command:

Resource:https://docs.aws.amazon.com/sdk-for-go/v1/developer-guide/configuring-sdk.html

Use env vars for temp credentials

Run the following with the proper values:

Resource:https://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_temp_use-resources.html

List instances

Get number of instances
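A possible Python equivalent, assuming a boto3 EC2 client is passed in. The function name `count_instances` and the optional state filter are assumptions added for illustration.

```python
def count_instances(ec2_client, state=None):
    """Count instances in the client's region, optionally limited to one state.

    ec2_client is assumed to be a boto3 EC2 client, e.g. boto3.client("ec2").
    """
    kwargs = {}
    if state is not None:
        kwargs["Filters"] = [{"Name": "instance-state-name", "Values": [state]}]
    total = 0
    paginator = ec2_client.get_paginator("describe_instances")
    for page in paginator.paginate(**kwargs):
        # Instances are grouped under reservations in the response
        for reservation in page["Reservations"]:
            total += len(reservation["Instances"])
    return total

# Usage (needs boto3 and valid credentials):
#   import boto3
#   print(count_instances(boto3.client("ec2"), state="running"))
```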

Resource:https://stackoverflow.com/questions/40164786/determine-how-many-aws-instances-are-in-a-zone

Reboot all instances in a region

Assign an elastic IP to an instance

Create instance with a tag

Resource:https://serverfault.com/questions/724501/how-to-add-a-tag-when-launching-an-ec2-instance-using-aws-clis

Create instance using security group name

List instances with filtering

This example in particular will get you all your m1.micro instances.

List instance by instance id

aws ec2 describe-instances --instance-ids i-xxxxx

Destroy instance

If you want to terminate multiple instances, be sure to use this format:

Get info about a specific AMI by name and output to JSON

aws ec2 describe-images --filters 'Name=name,Values=<AMI Name>' --output json

Get AMI id with some python

This uses the run_cmd() function found in /python-notes/.
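The run_cmd() helper is not reproduced here, so the sketch below uses `subprocess.run` from the standard library as a stand-in. It wraps the describe-images command shown above; the function names are hypothetical.

```python
import json
import subprocess


def describe_images_cmd(ami_name):
    """Build the argv for the describe-images call filtered by AMI name."""
    return [
        "aws", "ec2", "describe-images",
        "--filters", f"Name=name,Values={ami_name}",
        "--output", "json",
    ]


def first_image_id(describe_images_json):
    """Pull the first ImageId out of describe-images JSON output, or None."""
    images = json.loads(describe_images_json)["Images"]
    return images[0]["ImageId"] if images else None


def get_ami_id(ami_name):
    """Run the CLI (must be installed and configured) and return the AMI id."""
    result = subprocess.run(
        describe_images_cmd(ami_name),
        capture_output=True, text=True, check=True,
    )
    return first_image_id(result.stdout)
```

Splitting the command builder from the parser keeps the pieces easy to check without touching AWS.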

Deregister an AMI

Get list of all instances with the state terminated

List all instances that match a tag name and are running

Resources:

https://stackoverflow.com/questions/23936216/how-can-i-get-list-of-only-running-instances-when-using-ec2-describe-tags - filter by running instances

https://serverfault.com/questions/560337/search-ec2-instance-by-its-name-from-aws-command-line-tool - filter by instance name

Alternatively, if you want running instances, change Values=terminated to Values=running.

Get info about an AMI by product-code

This is useful if you have the product code and want more information (like the image ID). For CentOS, you can get the product code here. I started down this path when I was messing around with the code in this gist for automatically creating encrypted AMIs.

Resize ec2 instance

Show available subnets

Attach volume at root

Resource:https://stackoverflow.com/questions/37142357/error-instance-does-not-have-a-volume-attached-at-root-dev-sda1

List snapshots

Pretty decent, relatively up-to-date tutorial on using CodeBuild and CodeCommit to autobuild AMIs: https://aws.amazon.com/blogs/devops/how-to-create-an-ami-builder-with-aws-codebuild-and-hashicorp-packer/

If you want to use the gist mentioned above to create encrypted AMIs, be sure to specify aws_region, aws_vpc, aws_subnet, and ssh_username in the variables section.

CodeCommit

You like the idea of CodeCommit? You know, having git repos that are accessible via IAM?

How about using it in your EC2 instances without needing to store credentials? Really cool idea, right? Bet it's pretty easy to setup too, huh? Ha!

Well, unless you know what to do, then it actually is. Here we go:

Build the proper IAM role

- Login to the UI

- Click on IAM

- Click Roles

- Click Create role

- Click EC2, then click Next: Permissions

- Search for CodeCommit, check the box next to AWSCodeCommitReadOnly

- Click Next: Tags

- Give it some tags if you'd like, click Next: Review

- Specify a Role name, like CodeCommit-Read

- Click Create role

Now we're cooking. Let's test it out by building an instance and not forgetting to assign it the CodeCommit-Read IAM role. You can figure this part out.

Cloning into a repo

Once you've got a working instance:

- SSH into the instance and become root:

sudo su

- Install the awscli with pip:

pip install awscli

- Run this command and be sure to change the region to match the one you're working with:

- Run this command and be sure to change the region to match the one you're working with:

git config --system credential.https://git-codecommit.us-west-2.amazonaws.com.UseHttpPath true

- Run this command and be sure to change the region to match the one you're working with:

aws configure set region us-west-2

At this point, you should be able to clone your repo:

git clone https://git-codecommit.us-west-2.amazonaws.com/v1/repos/GREATREPONAME

Resources:

https://jameswing.net/aws/codecommit-with-ec2-role-credentials.html

https://stackoverflow.com/questions/46164223/aws-pull-latest-code-from-codecommit-on-ec2-instance-startup - This site got me to the above site, but had incomplete information for their proposed solution.

Integrating this in with CodeBuild

To get this to work with CodeBuild for automated and repeatable builds, I needed to do a few other things. Primarily, take advantage of the Parameter Store. When I was trying to build initially, my buildspec.yml looked something like this (basically emulating the one found in here):

However, I was getting this obscure error message about authentication, and spent several hours messing around with IAM roles, but didn't have any luck. At some point, I eventually decided to try throwing a 'parameter' in for the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. This worked great, but I noticed that whenever I tried the build again, I would run into the same issue as before. To fix it, I had to modify the buildspec.yml to look like this (obviously the values you have for your parameter store may vary depending on what you set for them):

At this point, everything is working consistently with the IAM role mentioned previously being specified in the packer file (this is a snippet):

Get the information for a particular parameter (this will give you the encrypted value if the parameter is a SecureString): aws ssm get-parameter --name <nameofparam>


List parameters

Access a parameter

Encrypt your pem file:

Remove the encryption:

Resource:https://security.stackexchange.com/questions/59136/can-i-add-a-password-to-an-existing-private-key

Set up aws cli with pipenv on OSX

List buckets

List files in a bucket

Download bucket

Resource:https://stackoverflow.com/questions/8659382/downloading-an-entire-s3-bucket/55061863

Copy file down

Copy file up

Copy folder up

Resource:https://coderwall.com/p/rckamw/copy-all-files-in-a-folder-from-google-drive-to-aws-s3

Cheatsheet

Set up S3 IAM for backup/restore

Storing aws credentials on an instance to access an S3 bucket can be a bad idea. Let's talk about what we need to do in order to backup/restore stuff from an S3 bucket safely:

Create Policy

- Go to IAM

- Policies

- Create Policy

- Policy Generator, or copy and paste JSON from here (YOLO) into Create Your Own Policy. This is the one I used:
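The policy JSON itself is not shown here. As a stand-in, this sketch builds one plausible policy for backing up to and restoring from a single bucket. The action list is an assumption, not necessarily the policy the author used, and the bucket name is a placeholder.

```python
import json


def backup_bucket_policy(bucket):
    """Build an IAM policy document allowing list, read and write on one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Reading and writing apply to the objects inside it
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # Paste the printed JSON into the policy editor
    print(json.dumps(backup_bucket_policy("awsexamplebucket"), indent=2))
```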

Create a Role

- Go to Roles in IAM

- Click Create role

- Select EC2

- Select EC2 again and click Next: Permissions

- Find the policy you created previously

- Click Next: Review

- Give the Role a name and a description, click Create role

Assign the role to your instance

This will be the instance that houses the service that requires a backup and restore service (your S3 bucket).

- In EC2, if the instance is already created, right click it, Instance Settings, Attach/Replace IAM Role

- Specify the IAM role you created previously, click Apply.

Set up automated expiration of objects

This will ensure that backups don't stick around longer than they need to. You can also set up rules to transfer them to long term storage during this process, but we're not going to cover that here.

From the bucket overview screen:

- Click Management

- Click Add lifecycle rule

- Specify a name, click Next

- Click Next

- Check Current version and Previous versions

- Specify a desired number of days to expiration for both the current version and the previous versions, click Next

- Click Save
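The same rule can probably be expressed in code instead of clicking through the console. A minimal sketch, assuming boto3's `put_bucket_lifecycle_configuration`; the rule name, bucket name, and day counts are placeholders.

```python
def expiration_rule(name, current_days, previous_days):
    """Build a lifecycle configuration that expires current and previous versions."""
    return {
        "Rules": [
            {
                "ID": name,
                "Status": "Enabled",
                # An empty prefix applies the rule to the whole bucket
                "Filter": {"Prefix": ""},
                "Expiration": {"Days": current_days},
                "NoncurrentVersionExpiration": {"NoncurrentDays": previous_days},
            }
        ]
    }

# Usage (needs boto3 and valid credentials):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-backup-bucket",
#       LifecycleConfiguration=expiration_rule("expire-backups", 30, 30),
#   )
```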

Mount bucket as local directory

Warning, this is painfully slow once you have it set up.

Follow the instructions found on this site.

Then, run this script:

Copy multiple folders to bucket

Resource:https://superuser.com/questions/1497268/selectively-uploading-multiple-folders-to-aws-s3-using-cli

Read from specific directory in s3 bucket in an ec2 instance

Create IAM role to grant read access to the bucket

- If accessing from an ec2 instance, find your ec2 instance in the web UI, right click it -> Security -> Modify IAM Role. Otherwise, just open the IAM console

- Click Roles -> Create role

- Click EC2

- Click Next: Permissions

- Click Create policy

- Click JSON

- Copy the json from here:

- Change awsexamplebucket to the name of your bucket and click Review policy

- Specify a Name for the policy and click Create policy

Attach IAM instance profile to ec2 instance

- Open the Amazon EC2 console

- Click Instances

- Click the instance you want to access the s3 bucket from

- Click Actions in the upper right-hand side of the screen

- Click Security -> Modify IAM role

- Enter the name of the IAM role created previously

- Click Save

To download files from the S3 bucket, follow the steps at the top of the page under INSTALL LATEST VERSION OF AWS CLI ON LINUX to get the AWS cli utils in order to grab stuff from the bucket.

Resources:

https://aws.amazon.com/premiumsupport/knowledge-center/ec2-instance-access-s3-bucket/ - Set up IAM and attach it to ec2 instance

https://stackoverflow.com/questions/6615168/is-there-an-s3-policy-for-limiting-access-to-only-see-access-one-bucket - IAM policy used

Create session

Resource:https://stackoverflow.com/questions/30249069/listing-contents-of-a-bucket-with-boto3

List buckets with boto3
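A minimal sketch, assuming a boto3 S3 client is passed in. The optional prefix filter follows the linked question; the function name `bucket_names` is hypothetical.

```python
def bucket_names(s3_client, prefix=""):
    """Return bucket names, optionally only those starting with prefix.

    s3_client is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    buckets = s3_client.list_buckets()["Buckets"]
    return [b["Name"] for b in buckets if b["Name"].startswith(prefix)]

# Usage (needs boto3 and valid credentials):
#   import boto3
#   print(bucket_names(boto3.client("s3"), prefix="backup-"))
```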

Resource: https://stackoverflow.com/questions/36042968/get-all-s3-buckets-given-a-prefix

Show items in an s3 bucket

List Users

Resource:https://stackoverflow.com/questions/46073435/how-can-we-fetch-iam-users-their-groups-and-policies

Get account id

Create ec2 instance with name
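One way this might look with boto3: the name is just a tag with the key Name, passed through TagSpecifications at launch. The AMI id and instance type below are placeholders, and the helper name is hypothetical.

```python
def named_instance_kwargs(image_id, instance_type, name):
    """Build run_instances arguments that launch one instance with a Name tag."""
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "Name", "Value": name}],
            }
        ],
    }

# Usage (needs boto3 and valid credentials; values are placeholders):
#   import boto3
#   ec2 = boto3.client("ec2")
#   ec2.run_instances(**named_instance_kwargs("ami-xxxxxxxx", "t2.micro", "my-instance"))
```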

Resources:

https://blog.ipswitch.com/how-to-create-an-ec2-instance-with-python

https://stackoverflow.com/questions/52436835/how-to-set-tags-for-aws-ec2-instance-in-boto3

http://blog.conygre.com/2017/03/27/boto-script-to-launch-an-ec2-instance-with-an-elastic-ip-and-a-route53-entry/

Allocate and associate an elastic IP

Allocate existing elastic IP

Resource:

https://boto3.amazonaws.com/v1/documentation/api/latest/guide/ec2-example-elastic-ip-addresses.html

http://blog.conygre.com/2017/03/27/boto-script-to-launch-an-ec2-instance-with-an-elastic-ip-and-a-route53-entry/

Wait for instance to finish starting
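A short sketch using the boto3 EC2 client's `instance_running` waiter, assuming the client is passed in. The function name is hypothetical.

```python
def wait_until_running(ec2_client, instance_id):
    """Block until the given instance reaches the running state."""
    # The waiter polls describe_instances and raises if it gives up
    waiter = ec2_client.get_waiter("instance_running")
    waiter.wait(InstanceIds=[instance_id])
    return instance_id

# Usage (needs boto3 and valid credentials):
#   import boto3
#   wait_until_running(boto3.client("ec2"), "i-xxxxx")
```

Note that running only means the instance has started; services such as SSH may need a little longer to come up.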

Resource:

https://stackoverflow.com/questions/46379043/boto3-wait-until-running-doesnt-work-as-desired

Get Credentials

Resource:

https://gist.github.com/quiver/87f93bc7df6da7049d41

Get region

Get role-name

The role name will be listed here.

Resource:https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-instance-metadata.html

Get Account ID
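Both the region and the account id appear in the instance identity document served by the metadata endpoint. The sketch below only parses that document; fetching it is left to the usage note, and it assumes the code runs on an EC2 instance. The function name is hypothetical.

```python
import json

# Instance identity document, reachable only from inside an EC2 instance
IDENTITY_URL = "http://169.254.169.254/latest/dynamic/instance-identity/document"


def region_and_account(identity_document_json):
    """Extract (region, account id) from the identity document JSON text."""
    doc = json.loads(identity_document_json)
    return doc["region"], doc["accountId"]

# Usage on an instance (IMDSv2 may additionally require a session token header):
#   from urllib.request import urlopen
#   with urlopen(IDENTITY_URL, timeout=2) as resp:
#       print(region_and_account(resp.read().decode()))
```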

Get public hostname

Resource:https://shapeshed.com/jq-json/#how-to-find-a-key-and-value

Stand up EC2 Instance

This accounts for the exceptionally annoying message: An error occurred (VPCIdNotSpecified) when calling the RunInstances operation: No default VPC for this user. There are no obvious solutions for it unless you do some deep diving. Essentially, it means that a default VPC isn't defined, so you need to provide a subnet id:

Resources:

https://docs.aws.amazon.com/sdk-for-go/v1/developer-guide/ec2-example-create-images.html - starting point

https://gist.github.com/stephen-mw/9f289d724c4cfd3c88f2

https://stackoverflow.com/questions/50289221/unable-to-create-ec2-instance-using-boto3 - where I found some of the solution (for boto, which translated to this fortunately)

https://docs.aws.amazon.com/sdk-for-go/api/aws/#StringSlice

Stand up EC2 Instance with lambda

Modify the previous code to make it into a lambda function - find it here.

Next you'll need to get the binary for the function:


With that, you'll need to zip it up:

At this point, you need to create the iam role:

- Navigate to https://console.aws.amazon.com/iam/home#/roles

- Click Create role

- Click Lambda

- Click Next: Permissions

- Add the following policies:

- Click Next: Tags

- Give it a Name tag and click Next: Review

- Give it a Role name such as 'LambdaCreateEc2Instance'

- Click Create role

- Once it's completed, click the role and copy the Role ARN

Now, you'll need to run the following command to create the lambda function:

Lastly, you'll need to populate all of the environment variables. To do this, you can use this script:

Alternatively, you can set the values in the lambda UI by clicking Manage environment variables:

but this gets very tedious very quickly.

If you want to throw all of this into a Makefile to streamline testing, you could do something like this:

Run the whole thing with this command:

At this point, you can go ahead and invoke the lambda function to see if everything is working as expected:

This can of course be determined by looking at your running EC2 instances and seeing if there's a new one that's spinning up from your invoking the lambda function.

Resources:

https://www.alexedwards.net/blog/serverless-api-with-go-and-aws-lambda#setting-up-the-https-api - doing all the lambda cli stuff and making a lambda function with golang

https://medium.com/appgambit/aws-lambda-launch-ec2-instances-40d32d93fb58 - doing the web UI stuff

https://docs.aws.amazon.com/lambda/latest/dg/configuration-envvars.html - setting the env vars programmatically

https://www.softkraft.co/aws-lambda-in-golang/ - fantastic in-depth guide for using Go with Lambda

CORS with lambda and API Gateway

Want to do AJAX stuff with your lambda function(s)? Cool, you're in the right place.

- Open your gateway

- Click Actions -> Enable CORS

- Check the boxes for POST, GET, and OPTIONS

- Input the following for Access-Control-Allow-Headers:

- Input the following for Access-Control-Allow-Origin:

- Click Enable CORS and replace existing CORS headers

For Options Method

Open the Method Response and click the arrow next to 200. Add the following headers:

For GET Method

Be sure to add the appropriate headers to your APIGatewayProxyResponse:
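The original snippet here is Go and is not shown. For illustration, this is roughly what a Lambda proxy response with CORS headers could look like in Python. The header values are assumptions and should match whatever you entered in the Enable CORS dialog.

```python
import json


def cors_response(payload, origin="*", status=200):
    """Build a Lambda proxy integration response that carries CORS headers."""
    return {
        "statusCode": status,
        "headers": {
            # Assumed values; tighten the origin for anything non-public
            "Access-Control-Allow-Origin": origin,
            "Access-Control-Allow-Headers": "Content-Type",
            "Access-Control-Allow-Methods": "GET,POST,OPTIONS",
        },
        # The proxy integration expects the body as a string
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Hypothetical Lambda entry point returning a CORS-enabled response."""
    return cors_response({"message": "ok"})
```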

Next, open the Method Response and click the arrow next to 200. Add the following headers:

For POST Method

Open the Method Response and click the arrow next to 200. Add the following header:

Finishing touches

Finally, be sure to click Actions and Deploy API when you're done

Resource:https://docs.aws.amazon.com/apigateway/latest/developerguide/how-to-cors-console.html

Return Response for API Gateway

You have two options here:

or alternatively:

Resources:

https://github.com/serverless/examples/blob/master/aws-golang-simple-http-endpoint/hello/main.go - used to figure out the first option

Update function via CLI

This is useful to run after updating your code. This will grab main.zip in the current directory:

Resource:https://stackoverflow.com/questions/49611739/aws-lambda-update-function-code-with-jar-package-via-aws-cli

Use serverless framework

This framework makes it easier to develop and deploy serverless resources, such as AWS Lambda Functions.

To start we'll need to install the Serverless Framework:

Then we will need to create the project with a boilerplate template. A couple of examples:

From here, you need to populate the serverless.yml template. This will use the lambda code from above that deploys ec2 instances:

it will also create the API gateway, IAM role and DynamoDB table.

Modify the Makefile if you'd like. The one I like to use can be found right below.

Next, compile the function and build it:

Resources:

https://www.serverless.com/blog/framework-example-golang-lambda-support - Lambda + Golang + Serverless walkthrough

https://marcelog.github.io/articles/aws_lambda_start_stop_ec2_instance.html - useful information for IAM actions needed for ec2 operations

https://forum.serverless.com/t/missing-required-key-tablename-in-params-error/4492/5 - how to set the dynamodb iam permissions

https://forum.serverless.com/t/deleting-table-from-dynamodb/3837 - how to delete a database or retain it

More useful Makefile

Move your functions into a functions folder in the repo for the serverless work.

Next, change the Makefile to the following:

This will output all function binaries into the bin/ directory at the top level of your project.

Resources:

https://github.com/serverless/examples/blob/master/aws-golang-auth-examples/Makefile - super useful Makefile example

Decode Error Message from CloudWatch Logs
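On the CLI this is done with aws sts decode-authorization-message --encoded-message. A rough boto3 equivalent, assuming an STS client is passed in and the caller has the sts:DecodeAuthorizationMessage permission; the function name is hypothetical.

```python
import json


def decode_authorization_failure(sts_client, encoded_message):
    """Decode an encoded authorization failure message into a dict."""
    response = sts_client.decode_authorization_message(EncodedMessage=encoded_message)
    # DecodedMessage is itself a JSON string
    return json.loads(response["DecodedMessage"])

# Usage (needs boto3 and valid credentials):
#   import boto3
#   details = decode_authorization_failure(boto3.client("sts"), "<encoded message>")
#   print(json.dumps(details, indent=2))
```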

Resource:https://aws.amazon.com/premiumsupport/knowledge-center/aws-backup-encoded-authorization-failure/

Secrets Manager


Create IAM role to grant read access to a secret

- If accessing from an ec2 instance, find your ec2 instance in the web UI, right click it -> Security -> Modify IAM Role. Otherwise, just open the IAM console

- Click Roles -> Create role

- Click EC2

- Click Next: Permissions

- Click Create policy

- Click JSON

- Copy the json from here:

- Change <your secret ARN> to the proper value of your secret, which you can find in the Secrets Manager UI, and click Review policy

- Specify a Name for the policy and click Create policy

Resource:https://docs.aws.amazon.com/mediaconnect/latest/ug/iam-policy-examples-asm-secrets.html

Get secret from secrets manager and output to file
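A minimal sketch with boto3, assuming a Secrets Manager client is passed in and the secret is stored as a string rather than binary. The function name `save_secret` and the restrictive file mode are additions for illustration.

```python
from pathlib import Path


def save_secret(secrets_client, secret_id, path):
    """Fetch a string secret and write it to path, readable by the owner only."""
    # Binary secrets come back under SecretBinary instead of SecretString
    value = secrets_client.get_secret_value(SecretId=secret_id)["SecretString"]
    target = Path(path)
    target.write_text(value)
    target.chmod(0o600)
    return target

# Usage (needs boto3 and valid credentials):
#   import boto3
#   save_secret(boto3.client("secretsmanager"), "my/secret/name", "secret.txt")
```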

Resource:https://stackoverflow.com/questions/50911540/parsing-secrets-from-aws-secrets-manager-using-aws-cli

Create new secret from a file

Resource:https://docs.aws.amazon.com/cli/latest/reference/secretsmanager/create-secret.html

Add access key and secret access key as secrets


List secrets

Update secret from a file

CloudTrail

Find when an ec2 instance was terminated

This will require you to have the instance id of the terminated instance and a rough sense of the day that it was terminated.

- Open the CloudTrail service

- Click Event history

- Select Event name from the dropdown

- Input TerminateInstances

- Search for the terminated instance id under the Resource name column
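The console steps above can be sketched with boto3 as well, assuming a CloudTrail client is passed in. The function name is hypothetical. Event history lookups only reach back 90 days.

```python
def termination_events(cloudtrail_client, instance_id):
    """Return (event time, username) pairs for TerminateInstances calls on an instance."""
    paginator = cloudtrail_client.get_paginator("lookup_events")
    pages = paginator.paginate(
        LookupAttributes=[
            {"AttributeKey": "EventName", "AttributeValue": "TerminateInstances"}
        ]
    )
    matches = []
    for page in pages:
        for event in page["Events"]:
            # Each event lists the resources it touched, including instance ids
            names = [r.get("ResourceName") for r in event.get("Resources", [])]
            if instance_id in names:
                matches.append((event["EventTime"], event.get("Username")))
    return matches

# Usage (needs boto3 and valid credentials):
#   import boto3
#   print(termination_events(boto3.client("cloudtrail"), "i-xxxxx"))
```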

Resource:https://aws.amazon.com/premiumsupport/knowledge-center/cloudtrail-search-api-calls/

IAM

Create user

Create access keys for a user

Resource:https://github.com/kubernetes/kops/blob/master/docs/getting_started/aws.md#setup-iam-user